Reflections on Friday’s “Rant”

In my last post, I went on a bit of a “rant” against my wireless provider, something I rarely do on this blog in public, and almost never by name. But the breakdowns in everything from how the CSR handled the specific transaction involved, to the underlying design of their processes and enabling technologies, generated such a wealth of fodder on “how NOT to run a CS function” that I really felt compelled to let loose.

Today, there are so many examples of “poor” customer service littered all over the blogosphere and twitter-verse that most of you probably get tired of reading about them (I know I do). But sometimes “venting” a bit on paper helps me get over a bad experience, and since today’s writing tablet is my blog, I figured “what the heck”… As it turns out, I did sleep pretty well after I got that rant out of my system, so apparently, the “therapy” worked for me.

That notwithstanding, I was fairly certain that this post would go largely unnoticed, and that I would wake on Saturday morning with a new attitude, ready to spend my usual “blogging hour” generating some fresh new topics for the coming week (which were bound to be more upbeat and forward looking). At least, that was the intent.

As with most things, however, my expectations were once again incorrect. What I thought was a stupid little “throwaway” post on my Friday afternoon CS experience generated about twice the readership of any of my posts in the last several months. While I’d like to think it was “earned” through good preparation, research, and authorship, the fact is that this one had zero preparation, was written on pure emotion and adrenaline, and was probably one of the most “long-winded” posts I’d written in a while.

Learning from a bad experience… “Delight the Customer”!!!

Reading through the comments, and reflecting a bit on the post, I realized that I struck quite a nerve in people who are both passionate about Customer Service and who (like all of us) have had their share of negative experiences. The responses I got ranged from “resignation” (this sucks, but unfortunately, “it is what it is”), to comparative reflection on companies that do in fact “get it”, to a sense of anticipation that this type of excellence will once again return to the industry. In fact, reading and thinking about some of the positive examples of CS in our society (both from today and the “good old days”) was a little like “comfort food” for me in healing the pain I endured on Friday. What was a negative post created positive energy. And that was good.

However, amidst all of those gyrations, I couldn’t help but reflect on words that kept popping up as I read the article and reader comments, which mostly revolved around the notion of “delighting the customer” and “exceeding expectations”. These words seem to show up often just after we juxtapose a bad customer experience with a really good example of what it should look like. Phrases like “CS should look to (fill in the blank) company, and how they Delight the Customer (instead of really mucking it up like they did)” are very common in these situations.

If only we could have providers that “Delight the Customer”!!! Those words make us feel hopeful that someday we will return to the good old days and the types of companies that really “got it”.

In the late 80’s and 90’s, we were trained to think differently about Customer Service, and to follow in the footsteps of what I’ll call the “CS legends” of that era. And those of us who started our careers in that era remember all too well those iconic images of companies “going the extra mile”, often with some kind of dramatic, back-breaking demonstration of Customer Service “heroics”. Most of us probably remember the old FedEx example from the mid 90’s where an employee hired a helicopter to get a package to the recipient (I can’t remember if it was an organ donation or a 10-year-old’s package from Santa, but I digress :)). Whether it’s that anecdote or one from the current era, that type of “over-delivery” has become something of an accepted standard for what “best practice” should look like, and the basis around which some of us continue to shape our expectations.

While I don’t excuse experiences like I had on Friday, I must admit that I did begin to reflect on, and actually question what “the standard” should be. What should have been my expectation? What should have been their goal for delivery against that expectation? And if their goal was to “delight the customer”, what should that look like in everything from the process to the behavior of the rep himself?

A New Standard: “Delighting the Customer” and “Exceeding Expectations”?

Some (counterintuitive) perspectives from the “old school”…

I recall working with an older (and wiser) colleague of mine about 15 years ago (he’s even older and wiser now!), who told me that the goal for Customer Service should be to MEET, not exceed, the customer’s expectation. And as a relatively young and unseasoned professional, my reaction was something like bull #$@*!

Heck, I probably recited that same FedEx story, along with every other example that was floating around in the B-school literature and CS journals in those days. Back then, I would have rationalized my response by telling myself that this guy was “an engineer” after all (no offense to you engineers out there, but in the industry I was in at the time, engineers had developed a reputation among the “Customer Ops side” of the business for being “old school” thinkers and “barriers to change”: an obvious error in judgement by those in CS, but reality nonetheless. Why? Because that industry, which was going through unprecedented change and feverish levels of competition, had developed two competing cultures: engineers on one side, who were literally “keeping the lights on”, and the Customer side of the organization (Sales, PR, Customer Service, Marketing, etc.) on the other, who were often flaunting their MBAs, B-school pedigrees, and FedEx case studies around the C-suite, with considerable success. “Pragmatists and doers” versus “ivory tower thinkers”. Always a recipe for disrespect of alternate views, and perhaps a subject for a future post.)

At the time, I remember thinking to myself, “this guy really has his head in the sand” (or somewhere less desirable!!). His words were so foreign to me, and they sounded so ‘ass backwards’. After all, in addition to all of the new “feel good” CS legends and case studies, surely there were the old adages of “the customer is king” and “the customer is always right” that he should have been tuned into. So how could anyone think that “exceeding expectations” could be ANYTHING BUT “universal truth”?

Well, we’ve both moved on from there. We’re both older and wiser on many issues, and I do enjoy seeing him occasionally and sharing a good cigar. While we’ve never really talked much more about that specific exchange, working together in the years after showed me enough about what he really meant.

What he was getting at was this: we, as service providers, spend so much time trying to beat the standard that we often miss it entirely. He was also saying that when we try to envision what it will take to truly “delight the customer”, we often get it wrong. That is, we often don’t take the time to learn what will or won’t delight the customer. And if we get it wrong, it becomes a slippery slope.

To “Meet” or “Exceed”?

While you may agree or disagree with his perspective, or its application in the real world, there is something to reflect on here.

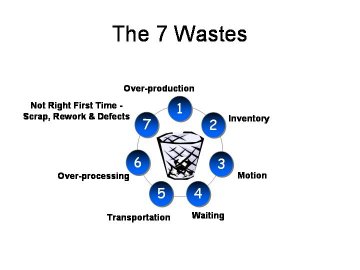

But what happened on Friday had nothing to do with failing to “delight me” or “failing to over-deliver on anything”. It was an abysmal failure to meet even the most basic expectations. And now that I look back on it, many of the things the company may have “thought” it was doing to “delight me” (new kiosks, a new ‘sign-in process’, flashy technology, etc.) were actually viewed by me as “background noise” to the transaction I was there to take care of.

Fact is, the simple act of meeting basic expectations can, and often does, drive BIG success, both in terms of magnitude and sustainability.

Think about McDonald’s. Same (or very close) experience: every product, every store, every time! They thrive on CONSISTENCY of the core product, and in my view very little on “exceeding expectations” and “the delight factor” (at least in the context of the “legends” I referenced earlier). Most people who frequent McDonald’s expect what they expect, and get it consistently.

Southwest Airlines is another good example. Come on!! An airline that actually made money when everyone else was losing their tails... and they don’t even have first class cabins or in-flight meals? That’s right. Because they MEET the expectations that they set. No surprises (which in my view is a bigger key to Customer Satisfaction than over-delivery).

Of course, I fully understand that some of those who preach the principle of “delighting the customer” are really saying the equivalent of “over-deliver on the promise you make (whatever that promise is), and then give that little extra touch” (or what we used to call in New Orleans, where I grew up, “lagniappe”, which in the Creole dialect means “a little bit extra”).

In fact, I believe that is exactly what both Southwest and McDonald’s do. It’s not that they set the bar low; they set it commensurate with the market they serve and target, and then deliver exactly to that standard. Then, perhaps where opportunities present themselves, they surround that experience with small “extras” in the way of smiles, humor, and courtesy. It’s all relative to the standard you set. And consistently delivering against your standard is a sure way to profitability.

Over delivery sometimes backfires…

Unfortunately, there are many practitioners, trainers and consultants that still interpret the “delight factor” as the type of dramatic heroics exhibited by the old legends of the game. The problem with this aspiration, no matter how noble, is that it often takes your eye off the ball so to speak, and distracts attention from meeting the core expectation. And that’s the main lesson I took away from my old colleague.

While “exceeding expectations” may score you some points, it can also be a slippery slope for a few reasons. First, you may not know what that elusive “delight factor” or threshold is for a specific customer or demographic. It’s hard for even the best companies to “get right”, even with decent market intelligence, and you usually don’t have a lot of “practice shots” to test your hypotheses without experiencing some fallout. More importantly, over-delivery on something that doesn’t matter to customers AT BEST loses you a few bucks, and at WORST serves as the type of background noise mentioned above that will only frustrate a customer more. And with the state of our Market Research and survey effectiveness these days (see comments on the above-referenced post), you have more of a chance of getting it wrong than right.

As an example, I am reminded of two separate instances where my flight left 15 minutes early, clearly “delighting” a few, but not me and some other passengers who were stuck in security both times. And yes, I was an hour early in both instances. Thankfully, it was before blogging, so y’all didn’t have to endure that rant. It was ugly, I can assure you.

Over delivery can also cost you dearly. Not always, but every investment in a customer is likely to have a point of diminishing returns. And in this economic climate, you need to make tough choices on how you will differentiate, compete and win. Often, competing on core product and core delivery is a winner.

Once again, Wisdom Wins…

Getting back to my old colleague for a minute. The fact that he was an engineer (a profession I have since learned to respect greatly) did give me some insight (albeit a few years later) into why he believed what he did. Of course, some of it was based on his direct experience with customers over the years. But some of it was based on his own history.

You see, if engineers get it wrong, delivering outside of spec on either side, then it’s usually “lights out” (figuratively and sometimes literally). I would suspect the same is true of accountants, airline pilots, and any other profession where meeting expectations is the first and often only objective. It’s a philosophy that shapes them, and realizing that will help us all understand their words and perspectives better. The reality is that none of them would probably take issue with the “delight factor”, but they will also never make it priority 1. And I, for one, don’t want them to.

Understand the expectation, set the bar, and deliver on it. If, after all of that, you’ve got a little “lagniappe” left to offer, have at it…

Author: Bob Champagne is Managing Partner of onVector Consulting Group, a privately held international management consulting organization specializing in the design and deployment of Performance Management tools, systems, and solutions. Bob has over 25 years of Performance Management experience and has consulted with hundreds of companies across numerous industries and geographies. Bob can be contacted at bob.champagne@onvectorconsulting.com

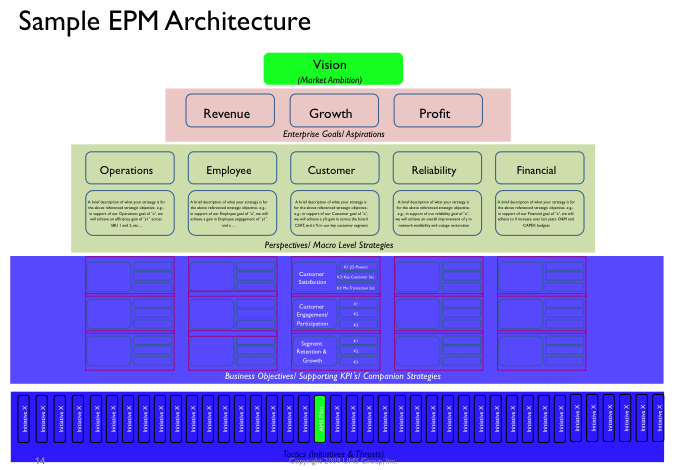

One of the questions I get asked often by my clients is “just how many KPI’s and metrics are enough for effective Performance Management to occur?”